Canvas animations on maps

Earlier this month, I discussed techniques for animation with canvas, sketching out how you can simulate motion and produce graphics, videos, and GIFs. You can draw anything with Canvas's primitives - lines, polygons, images, text - but I didn't explain how to do maps. Maps are just glorified charts, but you'll need the right tools and concepts to create them in Canvas. Let's see how.

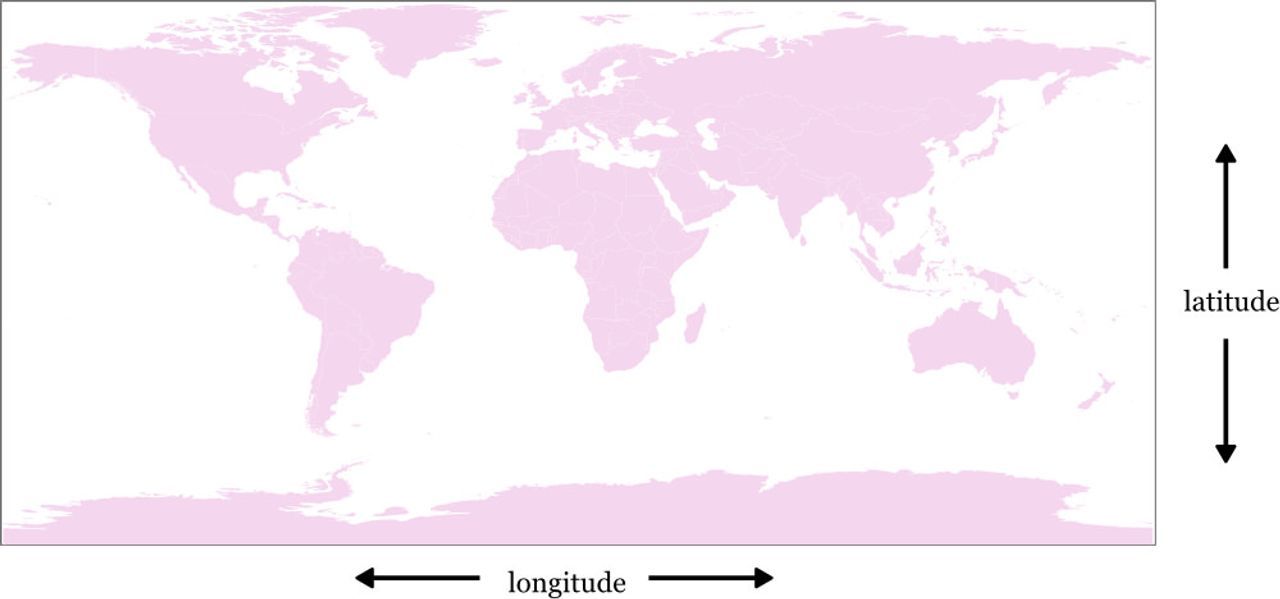

The literal projection

What cartographers term the equirectangular projection is the most literal transform from coordinates as we typically record them to an image. Longitude and latitude are numbers from -180 to 180 and -90 to 90 respectively, and images are plotted as pixels from 0 to width and 0 to height.

The equirectangular projection requires only arithmetic: to project longitude and latitude values onto a 360x180 canvas, you would write

    // longitude and latitude onto a 360x180 canvas
    var x = longitude + 180;
    var y = 90 - latitude;

    // scaling them up to fit a 720x360 canvas
    var x = (longitude + 180) * 2;
    var y = (90 - latitude) * 2;

    // to pan the map, you can add to one of the numbers
    var x = (longitude + 180 + 50) * 2;
    var y = (90 - latitude) * 2;

The equirectangular projection doesn't have much cartographic value: mostly it's popular because it's incredibly simple. This is how I created a video of the centers of all OpenStreetMap edits.

You might notice that that video starts from nothing: there's no basemap under the points. This is because most maps you'll see on the internet aren't in the equirectangular projection, so I don't have many go-to sources for tiles. On the satellite side, you can find plenty of NASA composite images like Blue Marble that are in equirectangular, but Mapbox, OpenStreetMap, and the vast majority of other mapping services are available only in the Spherical Mercator projection.
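Before moving on to Mercator, the three equirectangular snippets above can be folded into one function. This is only a sketch: the name equirectangular and its scale and panDegrees parameters are my own invention for illustration, not part of any library.

```javascript
// Equirectangular projection: degrees in, canvas pixels out.
// `scale` is pixels per degree; `panDegrees` shifts the map horizontally.
// Both parameter names are hypothetical, chosen for this sketch.
function equirectangular(longitude, latitude, scale, panDegrees) {
    scale = scale || 1;
    panDegrees = panDegrees || 0;
    return [
        (longitude + 180 + panDegrees) * scale,
        (90 - latitude) * scale
    ];
}

// A 720x360 canvas works out to 2 pixels per degree.
console.log(equirectangular(0, 0, 2)); // [ 360, 180 ]
console.log(equirectangular(-180, 90, 2)); // [ 0, 0 ]
```

Note that panning this way doesn't wrap: a point pushed past the right edge simply lands off the canvas.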

Spherical mercator

To get more context in your canvas geo-visualizations, you'll want an actual map behind them. Let's review the tools of the trade.

- node-sphericalmercator implements the transformation between spherical mercator and WGS84 (longitude & latitude)

- geo-viewport transforms bounding boxes into zoom + centerpoint combinations

For Python fans, mercantile solves the same problems as node-sphericalmercator.

For projections in general, proj4 and proj4js are the comprehensive solutions, and d3 projections are also very strong. But the transform between Spherical Mercator and longitude & latitude is one of the simplest possible, so using a lightweight, purpose-built tool like node-sphericalmercator makes a lot of sense.

- We'll need a dataset and a basemap

- To frame the visualization, choose a geographical extent and image size

- Then decide how the animation will look across time

I'll use the NYC Taxi Trips dataset famously liberated by Chris Whong.

To determine the desired geographical extent, you can either find the actual extent of the data, or choose one yourself. I tend to do the latter, since most datasets have outliers - one taxi that drives out to New Jersey shouldn't mean that your map should be state-level rather than city-level.

Okay, so the taxi dataset is a really big CSV file. I'll be doing this in node as usual. First thing is to set up CSV parsing and file reading: with a file this large, use a streaming parser so that you never hold gigabytes of data in memory.

Make a directory to play around in:

    mkdir taxi-map
    cd taxi-map

Initialize a package.json file. This makes your experiment replicable: with the dependencies recorded in this file, others can easily run npm install and get the same software you used.

    npm init

Install the csv-parser module. There are lots of fancy CSV parsers: this is a pretty good one.

    npm install --save csv-parser

Then start writing draw.js:

    var fs = require('fs'),
        csv = require('csv-parser');

    fs.createReadStream('trip_data_1.csv')
        .pipe(csv())
        .on('data', function(data) {
            console.log('row', data);
        });

Run node draw.js to make sure that the CSV is being parsed properly. A row looks like:

    { medallion: '1CF8717030F447204CCBD67F812CD426',
      hack_license: '5292D80EDB27399CEDA641A9241593DF',
      vendor_id: 'VTS',
      rate_code: '1',
      store_and_fwd_flag: '',
      pickup_datetime: '2013-01-13 10:38:00',
      dropoff_datetime: '2013-01-13 10:39:00',
      passenger_count: '1',
      trip_time_in_secs: '60',
      trip_distance: '.26',
      pickup_longitude: '-74.002815',
      pickup_latitude: '40.749241',
      dropoff_longitude: '-74.002258',
      dropoff_latitude: '40.751831' }

Keeping this simple, we'll only pay attention to the pickup location, at least at first. The quotes around each value mean that it's being parsed as a string, so we'll use parseFloat to turn the coordinates into numbers before doing anything else.
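Here's roughly what that conversion could look like. parsePickup is a hypothetical helper name, and returning null for unparseable values is my own choice rather than anything the dataset requires.

```javascript
// Convert a parsed CSV row (all string values) into a numeric
// [longitude, latitude] pair, or null when either value is unusable.
// parsePickup is a hypothetical helper for this sketch.
function parsePickup(row) {
    var longitude = parseFloat(row.pickup_longitude);
    var latitude = parseFloat(row.pickup_latitude);
    // parseFloat('') is NaN, so blank cells are rejected here.
    if (!isFinite(longitude) || !isFinite(latitude)) return null;
    return [longitude, latitude];
}

var row = { pickup_longitude: '-74.002815', pickup_latitude: '40.749241' };
console.log(parsePickup(row)); // [ -74.002815, 40.749241 ]
console.log(parsePickup({ pickup_longitude: '', pickup_latitude: '' })); // null
```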

Using a static map for a background

Let's pull up and get that extent in place. I use geojson.io for this sort of thing: zoom into the map and draw a box around New York. Then go to Meta -> Add bboxes to add a bbox property to each feature. For the box I drew, that adds:

    "bbox": [
        -74.09111022949219,
        40.60456943720527,
        -73.76014709472656,
        40.8626410807892
    ]

geo-viewport gives you the magic code to figure out, for a bounding box and desired pixel bounding box, what zoom level & centerpoint will properly contain the bounding box.
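To take some of the magic out of it, here's a sketch of how that kind of fit can be computed. To be clear, this is not geo-viewport's source code: fitViewport, toWorld, and fromWorld are hypothetical names, and I'm assuming 256px tiles and whole-number zoom levels. The idea is to measure the bounding box in Spherical Mercator "world" units, then find the largest zoom at which it still fits in the image.

```javascript
// Illustrative only: this is not geo-viewport's implementation.
var TILE_SIZE = 256; // assumed tile size

// Longitude/latitude to Spherical Mercator world coordinates in [0, 1],
// with [0, 0] at the top-left of the world.
function toWorld(lon, lat) {
    var sin = Math.sin(lat * Math.PI / 180);
    return [
        (lon + 180) / 360,
        0.5 - Math.log((1 + sin) / (1 - sin)) / (4 * Math.PI)
    ];
}

// Inverse of toWorld.
function fromWorld(x, y) {
    var n = Math.PI * (1 - 2 * y);
    return [
        x * 360 - 180,
        Math.atan(Math.sinh(n)) * 180 / Math.PI
    ];
}

// bbox is [west, south, east, north]; dimensions is [width, height] in px.
function fitViewport(bbox, dimensions) {
    var topLeft = toWorld(bbox[0], bbox[3]);
    var bottomRight = toWorld(bbox[2], bbox[1]);
    var spanX = bottomRight[0] - topLeft[0];
    var spanY = bottomRight[1] - topLeft[1];
    // At zoom z the world is TILE_SIZE * 2^z pixels across, so solve for
    // the z at which each span exactly fills the image, then round down.
    var zoomX = Math.log2(dimensions[0] / (TILE_SIZE * spanX));
    var zoomY = Math.log2(dimensions[1] / (TILE_SIZE * spanY));
    return {
        center: fromWorld(
            (topLeft[0] + bottomRight[0]) / 2,
            (topLeft[1] + bottomRight[1]) / 2),
        zoom: Math.floor(Math.min(zoomX, zoomY))
    };
}

var viewport = fitViewport([
    -74.09111022949219, 40.60456943720527,
    -73.76014709472656, 40.8626410807892
], [640, 640]);
console.log(viewport.zoom); // 11
```

For the New York box, both the width and the height run out of room a little past zoom 11, so rounding down gives 11.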

Install geo-viewport:

    npm install --save geo-viewport

And then create a file download_map.js:

    var geoViewport = require('geo-viewport');

    console.log(geoViewport.viewport([
        -74.09111022949219,
        40.60456943720527,
        -73.76014709472656,
        40.8626410807892
    ], [640, 640]));

This outputs:

    { center: [ -73.92562866210938, 40.73360525899724 ], zoom: 11 }

Okay: let's get a map there. I'll use the Mapbox Static API for this. Since I'm only doing it once, I'll use Katy Decorah's Static Map Maker and plug in the numbers from above.

Since this map includes OpenStreetMap data and Mapbox design, it'll need that © Mapbox © OpenStreetMap attribution anywhere it goes.

So I'll download the static map and call it background.png. Let's start drawing points.

Projecting points on a static map

First we'll just get this background.png image to pass through node-canvas, the library that I covered in the last post about animation.

Install node-canvas:

    npm install --save canvas

Comment out the main bit of the draw.js script for now. In its place, initialize a 640x640 canvas to fit the image, draw the image on it, and save the result to a file.

    // Written against the node-canvas 1.x API; version 2 and later
    // export createCanvas(width, height) instead of a Canvas constructor.
    var fs = require('fs'),
        Canvas = require('canvas');

    var canvas = new Canvas(640, 640);
    var ctx = canvas.getContext('2d');

    var background = new Canvas.Image();
    background.src = fs.readFileSync('./background.png');
    ctx.drawImage(background, 0, 0);

    fs.writeFileSync('frame.png', canvas.toBuffer());

Now it's time to combine the background layer with our datasource. At this point you know there's a 640x640 image with a zoom level and centerpoint: this is enough to calculate where a given longitude, latitude point should fall.

This is where node-sphericalmercator comes in. The function you'll use is called px: it translates longitude, latitude, and zoom into pixel coordinates, x & y.

Maps are often thought of in tile coordinates, rather than pixel coordinates. The two are basically interchangeable: tile coordinates are pixel coordinates divided by 256, and vice-versa.
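As a quick illustration of that relationship, here's a sketch that converts in both directions. pixelToTile and tileToPixel are hypothetical helpers, and the 256px tile size is the assumption stated above.

```javascript
var TILE_SIZE = 256;

// Which tile contains a given world pixel, and where inside that tile?
// Hypothetical helper for this sketch.
function pixelToTile(px) {
    return {
        tile: [Math.floor(px[0] / TILE_SIZE), Math.floor(px[1] / TILE_SIZE)],
        offset: [px[0] % TILE_SIZE, px[1] % TILE_SIZE]
    };
}

// The world pixel at the top-left corner of a tile.
function tileToPixel(tile) {
    return [tile[0] * TILE_SIZE, tile[1] * TILE_SIZE];
}

console.log(pixelToTile([600, 300]));
// { tile: [ 2, 1 ], offset: [ 88, 44 ] }
console.log(tileToPixel([2, 1])); // [ 512, 256 ]
```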

Install sphericalmercator: npm install sphericalmercator.

Create a new projection instance:

    var projection = new (require('sphericalmercator'))();

So, for instance: at zoom level 0, 0°, 0° is in the middle of a single 256x256px tile. -180°, 85° is in the top-left corner and 180°, -85° is in the bottom-right.

    > projection.px([0, 0], 0)
    [ 128, 128 ]
    > projection.px([-180, 85], 0)
    [ 0, 0 ]
    > projection.px([180, -85], 0)
    [ 256, 256 ]
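If you're curious what's going on inside px, the math is short enough to write by hand. The following is a sketch of the standard Spherical Mercator forward transform for whole-number zoom levels, not a copy of sphericalmercator's source; lonLatToPixel is a hypothetical name, and rounding to whole pixels is my assumption, made so that the results line up with the ones above.

```javascript
// A hand-rolled Spherical Mercator forward transform.
// Not the library's code: just the same math, for illustration.
function lonLatToPixel(lonLat, zoom) {
    var size = 256 * Math.pow(2, zoom); // width of the whole world in px
    var sin = Math.sin(lonLat[1] * Math.PI / 180);
    // Longitude maps linearly; latitude is stretched towards the poles.
    var x = size * (lonLat[0] + 180) / 360;
    var y = size * (0.5 - Math.log((1 + sin) / (1 - sin)) / (4 * Math.PI));
    return [Math.round(x), Math.round(y)];
}

console.log(lonLatToPixel([0, 0], 0)); // [ 128, 128 ]
console.log(lonLatToPixel([-180, 85], 0)); // [ 0, 0 ]
console.log(lonLatToPixel([180, -85], 0)); // [ 256, 256 ]
```

Each extra zoom level doubles size, which is why the same point has pixel coordinates twice as large at zoom 1 as at zoom 0.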

So we have a projection, a static map, and a bunch of data at different longitude, latitude positions. To figure out where in the image to draw the data, first find the pixel location of the map's center, and from that the pixel location of its top-left corner, which we'll call the 'origin'. Then, for each data point, we can find its pixel location, subtract the origin, and get a position relative to the image.

    var center = projection.px([
        -73.92562866210938,
        40.73360525899724], 11);

    // The image is 640x640, so to get from the center
    // to the top-left, we go left and up by half the width & height
    var origin = [
        center[0] - 320,
        center[1] - 320
    ];

Now that we have the origin value, we can create a function that gives pixel values relative to the static map, rather than the top-left corner of the entire world.

    function positionToPixel(coord) {
        var px = projection.px(coord, 11);
        return [
            px[0] - origin[0],
            px[1] - origin[1]
        ];
    }

positionToPixel will transform longitude, latitude points to pixel coordinates used in node-canvas. Consult the previous post for the details of the leftpad module: let's just dive into animation.
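One thing to keep in mind: points outside the chosen extent, like that taxi in New Jersey, come back from positionToPixel with coordinates that are negative or larger than 640. Canvas will quietly ignore drawing outside its bounds, but if you'd rather skip those points explicitly, a check like this works. isOnCanvas is a hypothetical helper, not something from node-canvas.

```javascript
// True when a pixel position falls inside a canvas of the given size.
// Hypothetical helper for this sketch.
function isOnCanvas(pixel, width, height) {
    return pixel[0] >= 0 && pixel[0] < width &&
        pixel[1] >= 0 && pixel[1] < height;
}

console.log(isOnCanvas([320, 320], 640, 640)); // true
console.log(isOnCanvas([-42, 100], 640, 640)); // false
```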

    // Assumes csv (csv-parser) and leftpad are required at the top of
    // draw.js, and that the frames/ directory already exists.
    ctx.fillStyle = '#84014b';
    var frame = 0, perFrame = 100;

    fs.createReadStream('trip_data_1.csv')
        .pipe(csv())
        .on('data', function(data) {
            var pixel = positionToPixel([
                parseFloat(data.pickup_longitude),
                parseFloat(data.pickup_latitude)]);
            ctx.fillRect(pixel[0], pixel[1], 2, 2);
            frame++;
            if (frame > 0 && frame % perFrame === 0) {
                // draw an image every time we've drawn 100 points.
                fs.writeFileSync('frames/' + leftpad(frame / perFrame, 5) + '.png',
                    canvas.toBuffer());
                // and then draw, at a low opacity, the original static
                // map over it. this makes the points fade out.
                ctx.globalAlpha = 0.4;
                ctx.drawImage(background, 0, 0);
                ctx.globalAlpha = 1;
            }
        });

Run this for a while and frames will be generated in the frames directory. Then use ffmpeg to combine them into a video.
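The fade-out trick in that code is worth a quick bit of arithmetic. Each time the background is drawn over the canvas at globalAlpha 0.4, whatever was there keeps 60% of its visibility, so a point's trace shrinks geometrically. The sketch below assumes ordinary source-over compositing with an opaque background image; remainingOpacity is a hypothetical name.

```javascript
// Fraction of a point's original color still visible after the background
// has been drawn over it `overlays` times at the given globalAlpha.
function remainingOpacity(alpha, overlays) {
    return Math.pow(1 - alpha, overlays);
}

for (var n = 1; n <= 5; n++) {
    console.log(n, remainingOpacity(0.4, n).toFixed(3));
}
// 1 0.600
// 2 0.360
// 3 0.216
// 4 0.130
// 5 0.078
```

So after five frames a point is down to under 8% of its original strength. Raising globalAlpha shortens the trails and lowering it lengthens them.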

Onwards

The full source code for this example is on GitHub: feel free to use it and the concepts introduced in any way you'd like.

@macwright.com on Bluesky, @tmcw@mastodon.social on Mastodon