Remember the winter

In San Francisco, and to a lesser extent the world, Machine Learning is a two-word explanation for how the future will be better. Startups will succeed because they leverage machine learning. Your money will be managed better, and your alarm system will be able to detect and categorize motion because of machine learning. Machine learning is the reason why companies can claim higher valuations and why we shouldn't worry about self-driving cars deciding to drive off the highway.

I share much of this optimism, but think there's more to the story. Like any hot trend, the fervor behind machine learning lacks context - especially historical context, and an understanding of the dynamics at work to make us optimistic about technology in general.

- Optimism about AI may be cyclical

- Technological progress is as much a result as it is a cause of hype cycles. Three other components are all critical for keeping the cycle alive:

  - Benefactors

  - Expectations

  - Speculative pressure

Let's dive in.

Optimism about AI has been cyclical

In the history of artificial intelligence, an AI winter is a period of reduced funding and interest in artificial intelligence research. The term was coined by analogy to the idea of a nuclear winter. The field has experienced several hype cycles, followed by disappointment and criticism, followed by funding cuts, followed by renewed interest years or decades later.

If you aren't familiar with the concept of AI winter, I'd recommend reading up on it. It's the idea that AI funding and optimism are cyclical. The winters were around 1966, 1969, 1974, and 1987 - years when companies went out of business (Symbolics) or critical reports were filed. After the initial crash, AI became a forgotten subject - a field with scant support in academia or industry.

So far I haven't met anyone who had heard of the AI winter before, despite it being a well-established historical idea. We've forgotten how often we've fallen short of our plans. It's hard to imagine what it was like in 1968, when the Mother of All Demos occurred. Surely, at that rate of progress, anything seemed possible. Imagine fast-forwarding to the MS-DOS era, and wondering if you had gone backwards or forwards in time. Time is a flat circle.

I should probably reiterate that this blog post isn't a bet against AI. The AI winter may well be over permanently, and we all could live the remainder of our lives performing manual labor for cruel digital androids. When that happens, you'll be free to mock me for my pessimism (or was it optimism?), and I'll cede the point, but it won't be of much use by then.

Now, why has AI been cyclical? I'll go over some reasons why it, like any technology trend, is volatile. As for why it's cyclical, as best as I can describe it: we keep extrapolating the future from early results, and we keep thinking that we're on a path to strong AI, even when we're only solving a fraction of that problem.

Strong AI is, well - artificial general intelligence. Like the kind of general intelligence that humans and other organisms have, with its non-linear thought and tricky ways of reasoning. It's hard, really hard. And I'd expect some of the champions of AI to claim, well - we aren't really aiming for that. But are we? The framing of current AI is clearly human, with human voices and names.

The past cycles ended when we realized that we weren't on a track to general intelligence, and that the fashionable next-generation technology had clear and crippling limitations. That, combined with centralized funding sources, meant that the industry was fragile and could be knocked off-balance by a few damaging reports. And, of course, there was a breakdown in consumer sentiment and trust: a shift back to thinking that, no, computers were not about to become our friends. The actual 'AI' that shipped to consumers was disappointing and convinced people that computers were still more like calculators than organisms.

1. Benefactors

A lot of groundbreaking research in technology is funded either by a monopoly or the government.

Engelbart's work was funded by DARPA. So was the Internet. Unix was funded by Bell Labs in the 1970s - before its parent, AT&T, was broken up in an antitrust case.

As of now, Google funds a large portion of the hit computer science papers - like The Case for Learned Index Structures. Amazon's doing much of the cutting-edge work in voice recognition, and Apple's teams are at the bleeding edge of on-device machine learning.

All those companies are tarred as monopolies. There are calls to 'break them up'. They're cheating on their taxes, dominating entire markets, and getting massive fines for breaking anti-monopoly rules.

Fiction celebrates the 'Project X' efforts of monopolies: look at Tony Stark (Iron Man), Bruce Wayne (Batman), or Lex Luthor. And, heck, it's cool to look at what was created in the days of excess at Bell Labs, projects like Plan 9. But these high-budget long-shot projects were enabled by the kind of margins you collect as an entrenched monopoly, and ended when the monopolies ended.

As much as it's cool that the domination of Amazon gives us zany projects like an enormous clock built into the side of a mountain and a rocket company, one has to ask: what would have happened if there weren't that much slack in the market, so to speak? And what might happen to these playboy billionaire dreams if the SEC wakes up?

And the flipside of huge benefactors is a lack of diversity in funding. The era when a vast majority of AI funding originated from the government was probably the peak of funding concentration, but what we have today isn't all that different - a few nation-state-sized companies, many investors that share the same general thinking, and then a smattering of small companies that rely on those investors. Concentrated sources of investment mean that only a few people have to change their minds in order to tank an entire industry.

2. Expectations

We expect Siri to work. In the early days of Siri, people would start human-sounding conversations with it - 'Hey Siri, what do you think about them forty-niners?' - and be surprised by Siri's dumbfounded reply. In a world with barely-working infrastructure and egregious privacy & security problems, we were ready to believe that humanoids were real.

In part, this is because consumer approval of Apple was at such a high level.

And partly because our memory is so short. We had Ask.com in 1996, but it didn't live up to the stated goal of answering questions, in the general sense. We had Clippy, but hated it. Dragon NaturallySpeaking was pretty decent in 1997.

Anyway, 6 years after the release of Siri, voice recognition has improved greatly, and so has artificial intelligence. I'd also wager that some of the uptick in Siri-like interfaces is because we've started to accommodate the machine: cued by marketing material and conversations with friends, we now have a pretty clear idea of what Siri, Google, or Cortana can and can't do. We know the questions to ask. We still believe in super-intelligence, but when talking to computers, we avoid sensitive topics that'd embarrass both of us.

At the edges, there are whispers about the downsides. That AI can be racist. That even highly-lauded algorithms, like Google Translate, have no 'common sense'. That traditional ideas of debugging have no meaning in AI - we can visualize the mechanism, but only at a high level of abstraction, and diagnosing or precluding behaviors is exceedingly difficult.

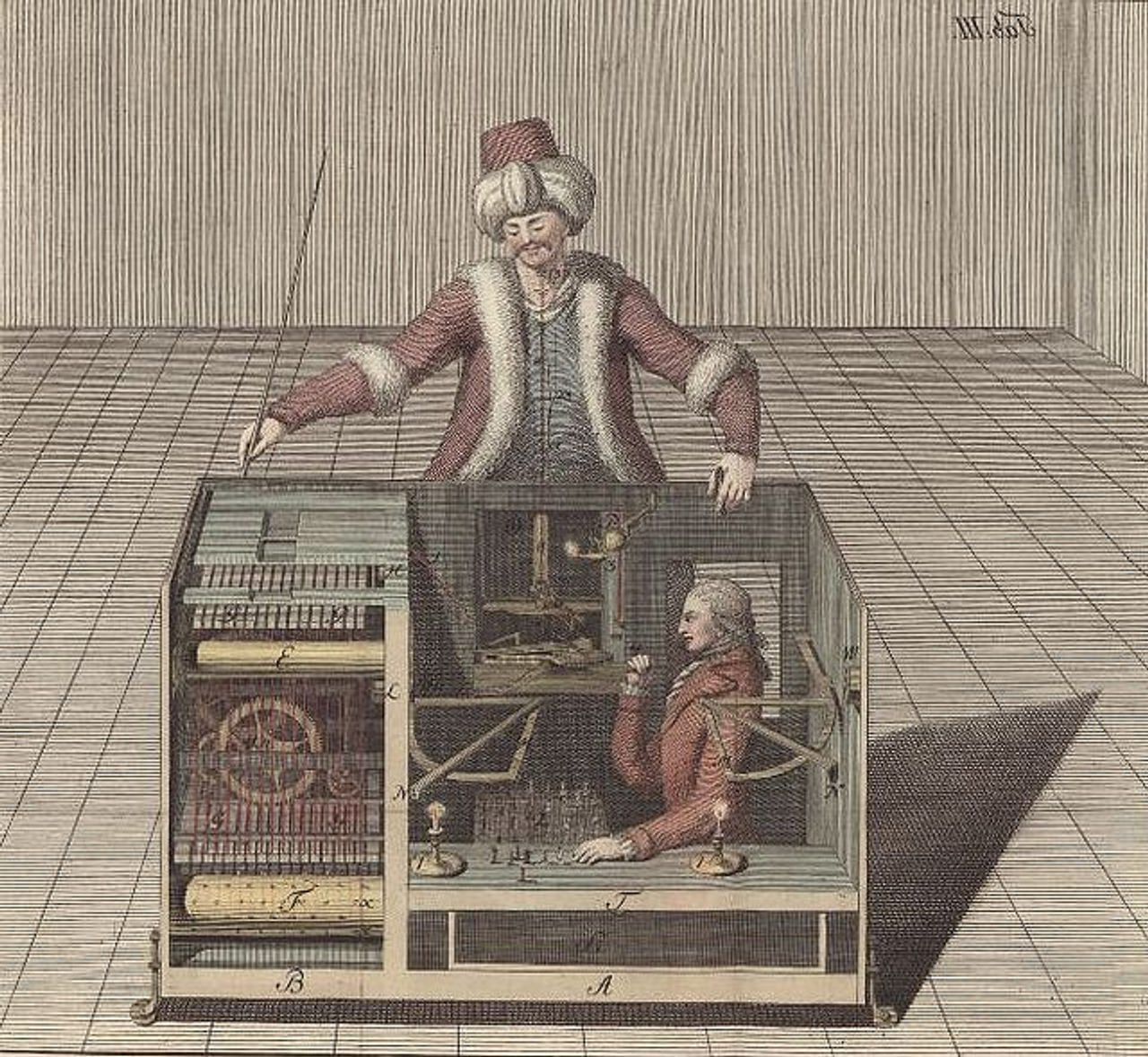

The funniest example is Facebook's M personal assistant, which unintentionally recreated the original Mechanical Turk. Heavily hyped at its time of release, it would use conditional random fields and maximum entropy classifiers in tandem with a team of humans, plus artificial intelligence (es?) that would listen in and learn from the conversations. Eventually it'd just be artificial intelligence, and Facebook would be able to fire the contract workers they initially employed to train the robots.

But, well, they didn't - it only reached 30% automation. Its users likely optimistically believed that they were talking to androids, but they were mostly talking to contract workers. It was harder than Facebook expected. If you look around, there are other big misses, like IBM Watson, which was a true marketing home run but delivered a tiny fraction of its touted abilities.

3. Speculative pressure

This one's possibly a bit insider baseball, but, well - startup trends are as much a result of the funding environment as anything else. Big investors see a trend, or they see one company hit a 'home run', and they want that again. There's a WeChat or a Google or a Facebook, and, though that company is doing great, maybe they discovered a new market, and there's more room at the edges? What about the startup that does almost the same thing, but just for the enterprise market, or just for the teens?

These trends flow in and out quickly. Chatbots were big in the recent past. Social was a big keyword, as was location. Apps were born in the Foursquare-adjacent era, and they're gone, or they've pivoted. Startups -- despite their reputation for doing everything fast -- aren't made that quickly, so they soft-pivot. If they use simple linear regression, that's enough to add 'machine learning' to a PowerPoint pitch. So quickly we get applications that leverage machine learning to optimize their dog-walking routes or bouquet selection.

Like M, maybe they really are, or maybe it's a little Bayesian math and a lot of contract employees.

End/FAQ

Perhaps the winter is over forever:

“At the same time, I don’t think there will be an ‘AI winter’ again, where hype cycles happen and there’s no more funding, and so on, because AI is now running in production,” Richard Socher said during an onstage interview at a Bloomberg event today. “Google search and social media, and now enterprises, are using AI on an ongoing basis — they’re seeing great returns on those investments.”

“There’s definitely hype,” adds Andrew Ng, “but I think there’s such a strong underlying driver of real value that it won’t crash like it did in previous years.”

Some of the recent boom in AI/ML has been the result of genuine innovation. Neural networks, tensors, and increasing hardware efficiency mean that artificial intelligence is concretely better today than it was in the recent past.

But too often we're drawing a straight line from where we are to the utopian future, and assuming that future is right around the corner. What looks like a technological revolution right now is also a lucky alignment of economics and consumer attitudes, all of which are fickle and constantly changing.

What might happen is that machine learning succeeds despite the public's lack of trust, and it becomes an antagonist. Products will advertise that they're curated by humans, or at least that there are human moderators. That'll become a selling point: that there is common sense that catches self-reinforcing evil cycles in feed-organization and other mechanisms. Companies will use machine learning behind the scenes, but it'll disappear from their product marketing.

Or perhaps what we're seeing right now is the validation / invalidation of the technology in different domains. It'll succeed in recognition and categorization tasks to the point that programmers will be able to call a detect() routine and it 'just works.' We'll gradually forget it was ever a hot trend, and just rely on that part of computing being fixed. But we'll also forget about the hype that this was going to solve everything, and the rest of our technology landscape will look pretty much the same.

Or, maybe, it'll all just work out. I kind of like Umer Mansoor's take the best:

AI is not magic and the hype will die down, but the next AI winter will be more like a California winter, not a Canadian one.

- You're so wrong! I might be! In some ways I hope I am - I hope the good things about AI come true. But I don't want to be right or wrong here - predicting the future is pretty dumb. I'm just trying to remember the past and see a more sophisticated narrative than tech futurism currently permits.

- You're really mixing AI and ML as terms. They have dictionary definitions! How could you? The terms are conflated in common usage.

- You have X factually wrong. Let me know! I'm always there at @tmcw on Twitter or my email on /about.

@macwright.com on Bluesky, @tmcw@mastodon.social on Mastodon