Linkrot

One of my hobbies is making sure that macwright.org is a pretty good website, in the traditional sense of the internet. It should be fast, reliable, and simple. It should work on phones. I shouldn't break links or change URLs.

However, it exists in a network. I've linked to about 4,000 different URLs from 300+ blog posts. Those links go all over, from YouTube videos to indie blogs just like this one. And they keep changing.

This was one of the things I noticed when I did a content update a few years ago: a lot of the things I was referring to in 2011 are offline now. So I want to keep my corner of the network well-connected.

This project sat on the TODO list for a long time, partly because I decided it should be a GitHub app that would automatically propose Pull Requests and replace dead links with Archive.org references. I kept getting 10% into that task and losing momentum to the sheer inanity of implementing third-party apps on really any platform.

So this is less a post about a cool tool you can use (though you can) and more about the kind of semi-bespoke tools that I tend to make nowadays.

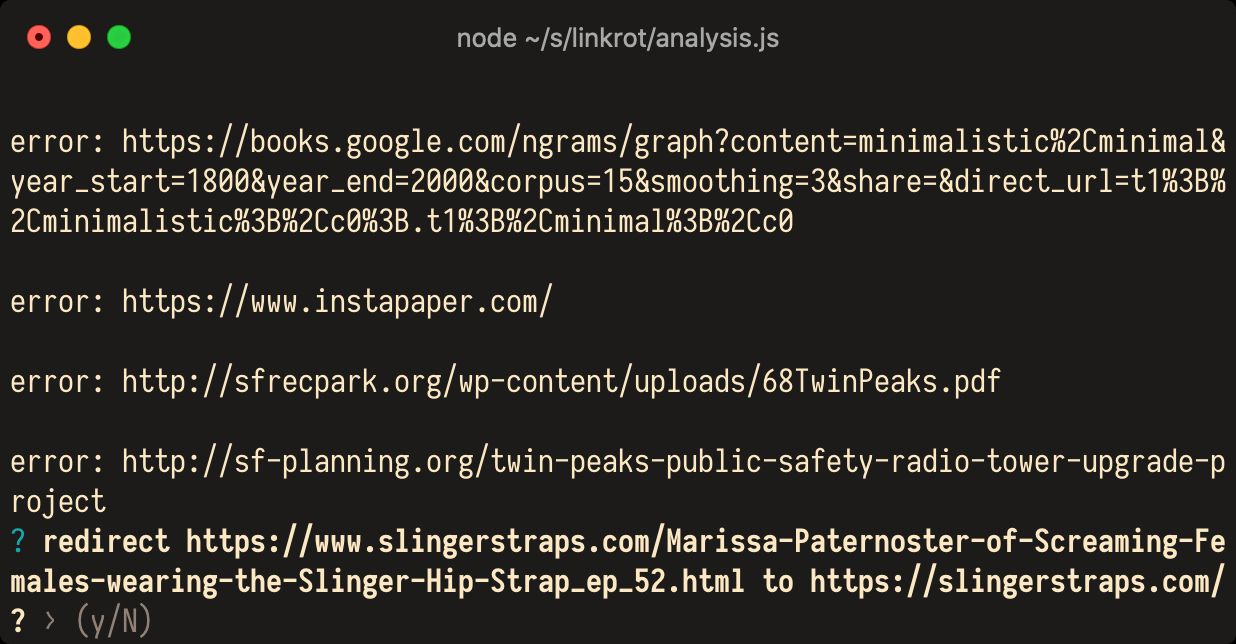

So, I wrote this thing called linkrot. It parses the Markdown source for every blog post on macwright.org, compiles all the links, and runs as a CLI. It automatically fixes links that are upgraded from HTTP to HTTPS, and asks to fix links that are now redirecting between discrete locations.
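The split between "fix automatically" and "ask first" can be sketched as a small pure function. This is a rough illustration rather than linkrot's actual code: `classifyLink` and its return values are hypothetical names, and it assumes you already know the URL that a request finally resolved to.

```javascript
// Hypothetical helper (not from linkrot): compare the URL written in a post
// with the URL the server finally answered from.
function classifyLink(originalUrl, finalUrl) {
  const from = new URL(originalUrl);
  const to = new URL(finalUrl);

  // The URL parser normalizes both sides, so a missing trailing slash
  // on a bare domain does not count as a change.
  if (from.href === to.href) return "unchanged";

  // Same host, path, query, and hash; only the scheme moved to https.
  const upgraded = new URL(from.href);
  upgraded.protocol = "https:";
  if (from.protocol === "http:" && upgraded.href === to.href) {
    return "https-upgrade"; // safe to rewrite without asking
  }

  // Anything else points at a different location: ask before rewriting.
  return "redirect";
}
```

Keeping this step free of network calls makes it easy to test separately from the part that actually fetches URLs.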

I have some tools that I really like for this kind of task.

Remark

I've written about remark before. It's a Markdown parser & formatter that, unlike most parsers, lets you interact with, query, and manipulate Markdown structure in much the same way as you could interact with an HTML DOM. I use remark to search for links in a document and to get the precise start & end positions of their URLs.

```js
const text = fs.readFileSync(filename, "utf8");
const remark = Remark().use(frontmatter, "yaml");
const ast = remark.parse(text);
// returns an array of all the parsed links
const links = selectAll("link", ast);
```

Remark saves you the danger and frustration of using regular expressions for this kind of task. I strongly considered writing this tool in a language other than JavaScript for fun & education (maybe Go, finally?) but, as far as I can tell, there's no Remark equivalent for any other language.

MagicString

The other special ingredient is MagicString, which I've used in nearly every project that includes transforming Markdown. MagicString solves the headaches of modifying strings by fixing indexes. From my initial step of parsing Markdown, finding URLs, and figuring out which URLs need to be modified, I'm left with a bunch of start & end indexes for where those URLs are in the file, and the URLs that they need to become. If I were to replace the first URL with something else, that would shift all of the text after it, making all those indexes invalid. I could go in backwards order so that the indexes don't change, but now we're talking about the level of nitpicky bookkeeping that would make this a chore.

```js
link.url = b;
s.overwrite(
  link.position.start.offset,
  link.position.end.offset,
  remark.stringify(link)
);
```

MagicString takes care of all that: you can refer to indexes in the original string, not worrying about moving them around by changing that string incrementally.

The fantastic thing about MagicString is that it lets you make truly minimal modifications. I just want to replace links in my posts: I don't want to re-stringify all my Markdown and modify other details of the files. MagicString lets you be precise and keep those Git diffs tidy.
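For contrast, the backwards-order bookkeeping mentioned earlier looks roughly like this in plain JavaScript. This is not how linkrot works; `applyEdits` and the `{ start, end, text }` edit shape are made up for illustration. It's the chore that MagicString takes off your hands.

```javascript
// Hypothetical helper: every edit refers to offsets in the ORIGINAL string.
function applyEdits(source, edits) {
  // Apply the last edit first so that earlier offsets stay valid.
  const ordered = [...edits].sort((a, b) => b.start - a.start);
  let out = source;
  for (const { start, end, text } of ordered) {
    out = out.slice(0, start) + text + out.slice(end);
  }
  return out;
}

const post = "See [a](http://a.example) and [b](http://b.example).";

// Find each URL's offsets in the original text and plan its replacement.
const edits = ["http://a.example", "http://b.example"].map((url) => {
  const start = post.indexOf(url);
  return { start, end: start + url.length, text: url.replace("http:", "https:") };
});

const fixed = applyEdits(post, edits);
// fixed === "See [a](https://a.example) and [b](https://b.example)."
```

This sketch assumes the edits never overlap. It works, but the sorting and slicing is exactly the kind of detail that is easy to get subtly wrong once there is more than one kind of edit.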

Linkrot works pretty well already for me, and I'm not trying to make it a big, reusable project at this point. So, as with other small projects like bespoke and premature-optimizer, I'm open sourcing it as a reference point and for the possibility that it maybe works for you too.

There's a lot more to do: I haven't implemented archive.org relinking yet because I've had trouble with their API. And my current approach of testing whether a URL is "up, down, or redirecting" doesn't cover all the cases: some websites don't respond to HEAD requests, and others redirect using HTML 'refresh' tags. Surprisingly, amzn.to, Amazon's shortener, uses Bit.ly, and Bit.ly uses HTML redirects so I can't automatically resolve them right now.
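One way the HTML 'refresh' case could be handled is to fetch the page body and look for the redirect target in it. The following is a rough sketch only: linkrot doesn't do this, `findMetaRefresh` is a hypothetical name, and a regular expression is a shortcut here. A real HTML parser would be more robust.

```javascript
// Hypothetical helper: return the target of a <meta http-equiv="refresh">
// tag in an HTML string, or null if there isn't one.
function findMetaRefresh(html) {
  const tags = html.match(/<meta\b[^>]*>/gi) || [];
  for (const tag of tags) {
    if (!/http-equiv\s*=\s*["']?refresh["']?/i.test(tag)) continue;

    // The content attribute looks like: 0; url=https://example.com/
    const content = /content\s*=\s*(?:"([^"]*)"|'([^']*)')/i.exec(tag);
    if (!content) continue;

    const value = content[1] ?? content[2];
    const target = /url\s*=\s*['"]?([^'"]+)['"]?/i.exec(value);
    if (target) return target[1].trim();
  }
  return null;
}
```

The returned value may be a relative URL, so a caller would still need to resolve it against the page's own address before checking it.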

There's also the question of caching. I've implemented a barebones system that writes a JSON file, which you commit to Git, recording the timestamp of when you last tested a given URL. This lets the tool skip re-checking work, but it doesn't yet cache anything about URLs with errors or redirecting URLs that you choose to ignore.
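A timestamp cache like that can be very small. The sketch below assumes a shape of `{ url: ISO timestamp }` and a 30-day recheck window; both are guesses for illustration, not linkrot's actual file format or settings, and `needsCheck` and `recordCheck` are hypothetical names.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// cache maps a URL to the ISO timestamp of its last successful check.
// Returns true when the URL has never been checked or the check is stale.
function needsCheck(cache, url, now, maxAgeMs = 30 * DAY_MS) {
  const last = cache[url];
  if (!last) return true;
  return now - Date.parse(last) > maxAgeMs;
}

// Returns a new cache object with the URL marked as checked at `now`
// (a millisecond timestamp). The result serializes cleanly with JSON.stringify.
function recordCheck(cache, url, now) {
  return { ...cache, [url]: new Date(now).toISOString() };
}
```

Storing ISO strings rather than raw numbers keeps the JSON file readable in a Git diff.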

I've fixed a few hundred outgoing links so far and plan to get archive.org relinking working, one of these days. linkrot is installable on npm (@tmcw/linkrot) and the source is up on GitHub.