Two Wishes for Dev Tooling

A few days ago, Simon Willison wrote a great post about PAGNIs, inspired by another post about YAGNI exceptions. I thought both were great, especially the bits about developer tooling. I've set up certain parts of tooling for teams multiple times - continuous integration, unit tests, code quality tools, continuous deployment - and every time it has been positive ROI, even counting the tears shed trying to keep front-end continuous-integration tests running.

But it got me thinking about two parts of developer tooling that aren't widespread and feel like they could be a lot better: one that exists but just isn't that popular anymore, and another that doesn't really exist but that I think should. Here goes.

Nonbinary code quality

My projects typically use two tools that check code correctness and quality: linting and tests. Linting detects a combination of code style and quality issues, while testing checks the code's ability to run, produce the correct result, and not crash. I use eslint, an absolutely lovely piece of open source technology, for linting. The way you configure eslint is something like this:

    {
      "react/react-in-jsx-scope": "warn",
      "react/display-name": "error",
    }

This example would warn if you haven't imported React before using JSX, but exit with an error if you’ve forgotten to define a component in a way that sets .displayName to a good value.

When you run eslint locally, you can get a nice list of warnings and errors:

    $ eslint --ignore-path .gitignore --ext .js,.ts,.tsx .

    /example/list_box_popup.tsx
      18:5  warning  'shouldUseVirtualFocus' is assigned a value but never used  @typescript-eslint/no-unused-vars

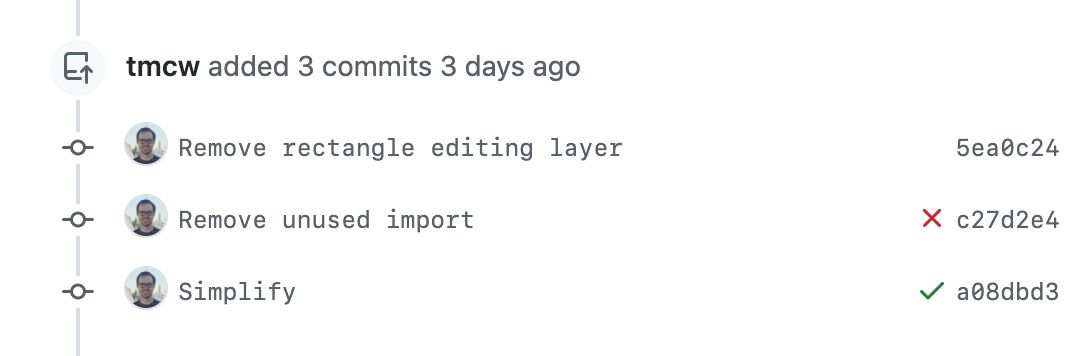

But if you run eslint in GitHub Actions, the main thing you see is simply whether there were errors or not:

Now this, of course, is pretty useful, and a vast improvement over how things worked on GitHub before they launched the Commit Status API in 2012 or GitHub Actions in 2019.

But still, we could go farther. In particular, I'd like to see a generalized version of Code Climate.

Code Climate is wildly popular with the Ruby on Rails set, and less so with other programming cultures. Its analysis for Ruby is world-class. Its analysis for TypeScript is pretty good - still extremely useful - but it just hasn't caught on as much.

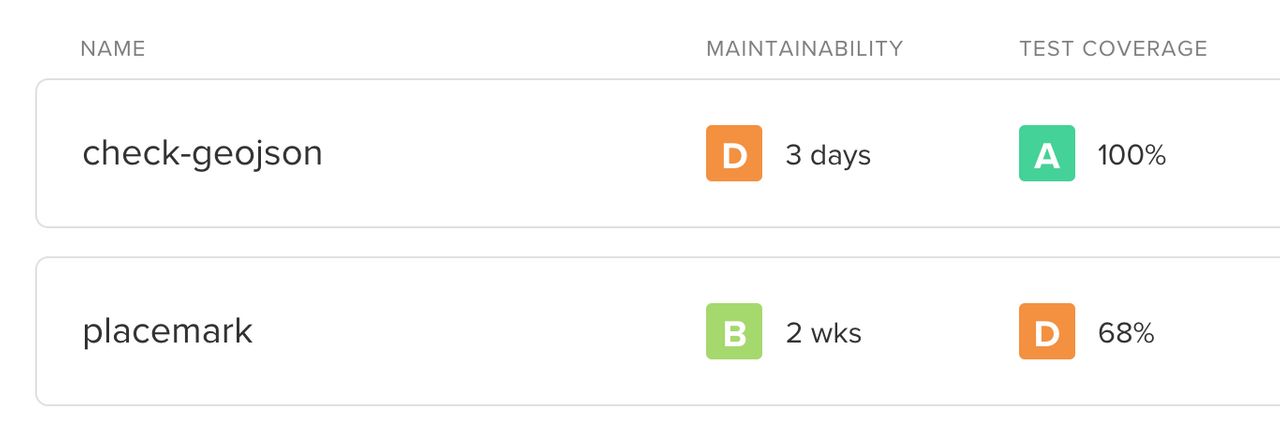

What Code Climate does differently is that it doesn't give you a Pass or Fail for code quality: it gives you scores, percentages, and their change over time.

Code Climate gives you charts of technical debt, warns you about duplicated code, and shows test coverage statistics. What matters is not just that it measures these things, but the way it changes the decision-making apparatus.

Traditional bureaucratic software engineering philosophy is to make continuous integration the arbiter of whether something can be merged (along with, of course, serious review by a human). When it comes to code quality, this means that a lot of metrics that are nice to have are either treated as warnings and allowed to multiply indefinitely, or marked as errors, in which case they make the GitHub Actions run fail, even when you really need to ship a feature and it doesn't matter that much whether your React lifecycle methods are in a particular order or whether you used single or double quotes.

But reality doesn't work like this. Projects that aim for code quality naturally slip from time to time. Tools that give you a grade open a third option: to see that a PR is adding technical debt, but accept it for now. And then to have a way to quantify efforts to clean up that debt.
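A rough sketch of that third option, assuming you have saved the output of eslint's JSON formatter (`eslint -f json`) for both the base branch and the PR. The `lintDelta` helper and the `RuleCounts` type are hypothetical names made up for illustration, and the interfaces declare only the fields the sketch uses. Instead of passing or failing, it reports how many issues each rule gained or lost:

```typescript
// Minimal shape of one entry in eslint's JSON formatter output.
// Only the fields used below are declared.
interface LintMessage {
  ruleId: string | null;
  severity: number; // 1 = warning, 2 = error
}

interface LintFileResult {
  filePath: string;
  messages: LintMessage[];
}

type RuleCounts = Record<string, number>;

// Count how many times each rule fired across all files.
function countByRule(results: LintFileResult[]): RuleCounts {
  const counts: RuleCounts = {};
  for (const file of results) {
    for (const message of file.messages) {
      const rule = message.ruleId ?? "(no rule)";
      counts[rule] = (counts[rule] ?? 0) + 1;
    }
  }
  return counts;
}

// Hypothetical helper: per-rule change in issue count between base and PR.
// Positive means the PR adds debt, negative means it pays some off.
function lintDelta(base: LintFileResult[], pr: LintFileResult[]): RuleCounts {
  const before = countByRule(base);
  const after = countByRule(pr);
  const delta: RuleCounts = {};
  for (const rule of Object.keys({ ...before, ...after })) {
    const change = (after[rule] ?? 0) - (before[rule] ?? 0);
    if (change !== 0) delta[rule] = change;
  }
  return delta;
}
```

A reviewer could then see something like "+2 no-unused-vars, -1 react/display-name" on a PR and decide whether to accept it for now.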

Code Climate is my example because it exists, but there's another area where I am dying for nonbinary tools, ones that go beyond Pass or Fail. And that is performance.

Both runtime performance and build size are things that you should measure as much as you can, and tools for measuring them are exploding in popularity, so it's pretty likely that you'll be adding one to your stack. And this is where a non-Pass/Fail metric would be super nice.

For runtime performance, for nearly every project that has a strong focus on performance, there are always going to be cases where you accept performance degradation in exchange for something. If you're developing a game and you start out with a blank WebGL canvas, the moment you render a title screen, performance will suffer. But when someone is doing a refactor that should have no effect on performance, you should make sure it has no effect. When someone is doing a refactor that improves performance, you should see the percentage improvement so you can congratulate them and celebrate.

Quotas are a fairly weak solution to this. Sure, it's bad for a front-end app to ship a 400kb bundle, but it's also bad for a 50kb app to accidentally add another 50kb of code by including some heavyweight dependency and not notice because it's still under the limit. The ideal solution is something more like change management, comparing against weighted historical averages, not simply assigning a pass or fail.
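To make "weighted historical averages" a little more concrete, here is a minimal sketch using an exponentially weighted moving average, which is only one possible weighting. The function names and the smoothing factor `alpha` are assumptions chosen for illustration, not taken from any existing tool. It works on any numeric series, such as bundle sizes or benchmark timings:

```typescript
// Exponentially weighted moving average: recent measurements count more
// than old ones. `alpha` (0 < alpha <= 1) is an assumed smoothing factor.
function weightedAverage(measurements: number[], alpha = 0.3): number {
  if (measurements.length === 0) {
    throw new Error("need at least one historical measurement");
  }
  let average = measurements[0];
  for (const value of measurements.slice(1)) {
    average = alpha * value + (1 - alpha) * average;
  }
  return average;
}

interface ChangeReport {
  baseline: number;      // weighted historical average
  current: number;       // measurement for the change under review
  percentChange: number; // positive = larger or slower than the baseline
}

// Compare a new measurement against the weighted history and report the
// relative change instead of a pass or fail. Assumes a nonzero baseline.
function compareToHistory(
  measurements: number[],
  current: number,
  alpha = 0.3
): ChangeReport {
  const baseline = weightedAverage(measurements, alpha);
  const percentChange = ((current - baseline) / baseline) * 100;
  return { baseline, current, percentChange };
}
```

With a flat history of 50 (say, kilobytes), a new measurement of 100 is reported as a 100% increase, even though it would still sit comfortably under a 400kb quota.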

A great system for this kind of metric could be configurable - so you could send benchmark results to it and see a chart of performance over time, but also have a GitHub Actions-like interface for sharing configurations. And for public repos, projects like are we fast yet and are we fast yet (for Rust) show how this sort of metric is addictive and satisfying.

Bot refactors

Basically every project I've been on in the last few years has used prettier, another remarkably great open source tool that automatically reformats code according to a standard. When I'm in a different language, I use a different tool that does the same thing.

The pattern with prettier is that I run it in vim, using coc.vim, so it reformats code on save. (I suspect there are many other, possibly better ways to do it, but that one works for me.) Then, to make sure, the husky module registers a git commit hook that runs pretty-quick over my files, which checks whether they're formatted with prettier and runs prettier on them.

It's not a bad system, but it is a weird one. Weird particularly because it means that individual members of a team are running tools that depend on their editor setups, and that try to catch code at the last minute before it goes to GitHub. If a non-prettified bit of code makes it onto GitHub, chaos ensues. If you vendor a dependency, you'll either have to make an exception for it in your prettier configuration, or the first commit will be a messy rewrite of the file as prettier does its magic to reformat someone else's house style.

So prettier is an example, but the bigger thing that I'm getting at is the concept of bot refactors. Could GitHub Actions run prettier without chaos? The moonshot here is, of course, automated refactoring. GitHub Copilot running on your existing code, creating refactored variants and creating PRs for refactors that pass tests. Dependabot not just updating your dependencies, but running codemods to adapt your code to new APIs. And some commits could be identifiable as whitespace-only or syntax-only, to keep the focus on functional change.
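As a very crude sketch of the last idea, a change could be labeled whitespace-only when the before and after versions of a file are identical once all whitespace is removed. The `classifyChange` name and the `ChangeKind` labels are hypothetical, and the heuristic also ignores whitespace inside string literals, so a real tool would more likely compare syntax trees:

```typescript
type ChangeKind = "identical" | "whitespace-only" | "other";

// Remove every whitespace character (spaces, tabs, newlines).
function stripWhitespace(source: string): string {
  return source.replace(/\s+/g, "");
}

// Crude heuristic: if two versions differ only in whitespace, label the
// change "whitespace-only". Anything else is simply "other".
function classifyChange(before: string, after: string): ChangeKind {
  if (before === after) return "identical";
  if (stripWhitespace(before) === stripWhitespace(after)) {
    return "whitespace-only";
  }
  return "other";
}
```

Most of what prettier does (quote style, semicolons, trailing commas) would still land in "other" here, which is why a syntax-aware comparison would be the natural next step.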